In the world of generative AI, Retrieval-Augmented Generation (RAG) has established itself as a powerful method to provide Large Language Models (LLMs) with up-to-date and domain-specific information. But how can such a system be implemented seamlessly in practice?

The answer: swoox.io – a modern automation and integration platform that makes RAG projects efficient, scalable, and maintainable.

The Use Case: RAG with Firecrawler, swoox.io, and Ollama

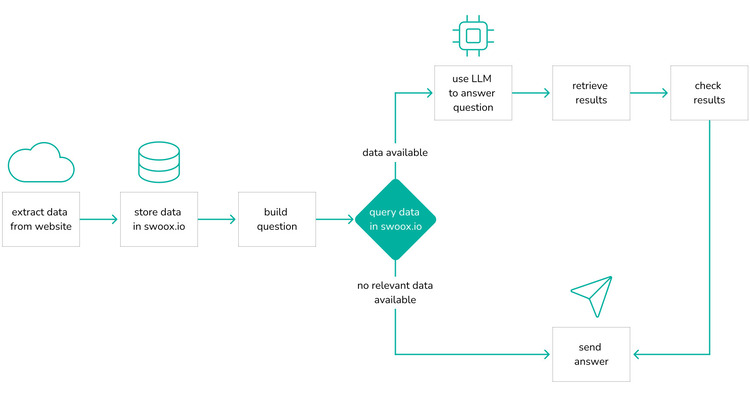

In a real-world project, a complete Retrieval-Augmented Generation workflow was implemented using the following components:

- Data acquisition with Firecrawler

The website swoox.io was crawled using Firecrawler. Relevant content was extracted and stored in an internal data repository – forming the basis for the retrieval part of the RAG system.

- Automated data processing with swoox.io

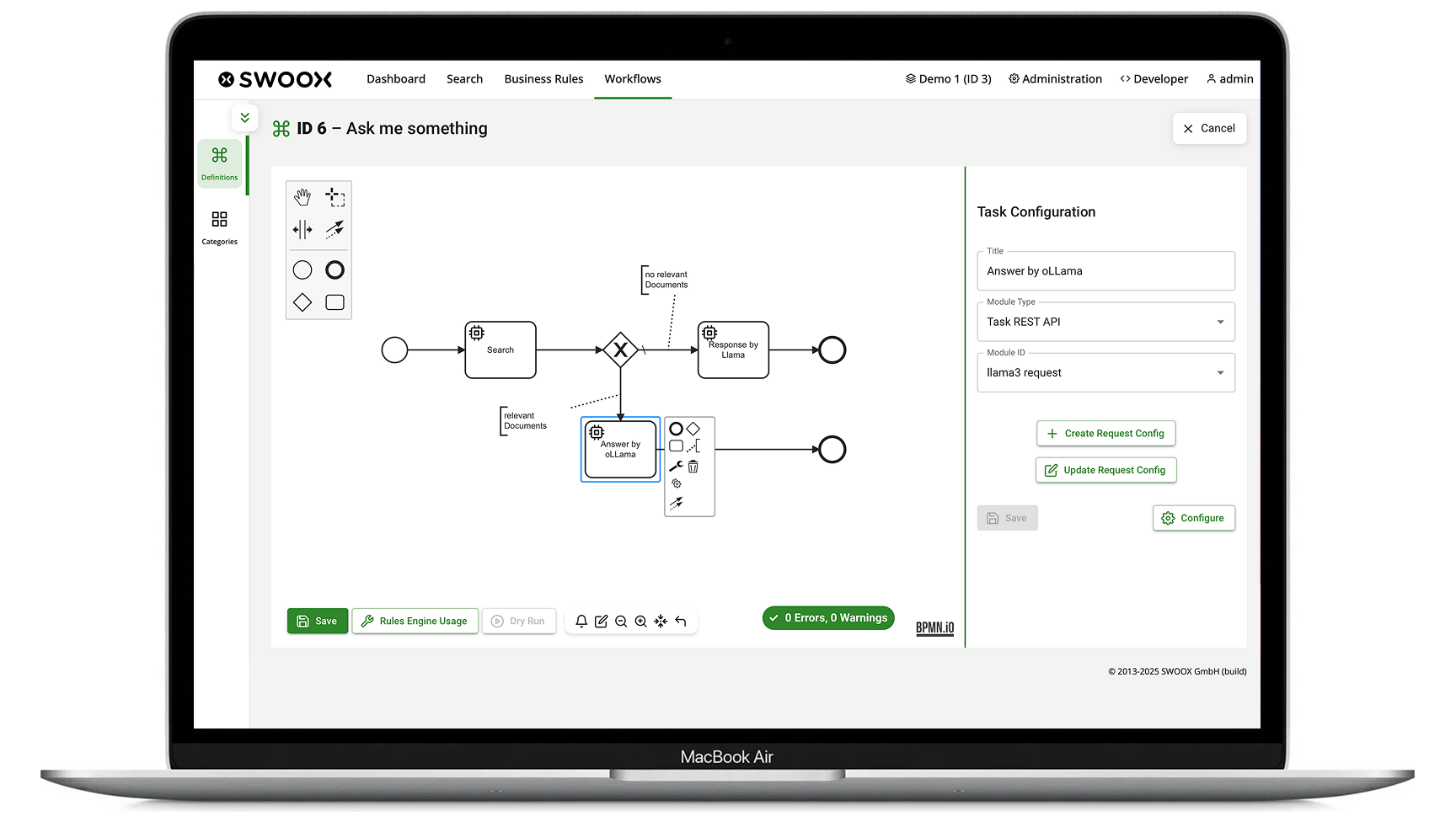

The entire process chain – from crawling to indexing to query processing – was orchestrated by swoox.io. The platform served as a central hub for all automation and integration tasks.

- Answer generation with Ollama (local Large Language Model)

Relevant documents were identified through semantic search and passed to an LLM served locally through Ollama, which generated context-aware answers.
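The answer-generation step can be sketched roughly as follows. This is a minimal illustration, not the project's actual implementation: it assumes an Ollama server on its default local port and uses Ollama's `/api/generate` endpoint, while the model name `llama3`, the prompt wording, and the helper names `build_prompt` and `generate_answer` are hypothetical choices made for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_prompt(question: str, documents: list[str]) -> str:
    """Combine the retrieved passages and the user question into one prompt."""
    context = "\n\n".join(
        f"[{i}] {doc}" for i, doc in enumerate(documents, start=1)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def generate_answer(
    question: str, documents: list[str], model: str = "llama3"
) -> str:
    """Send the prompt to the local Ollama server and return the generated text.

    The model name is an assumption; use any model already pulled into Ollama.
    """
    payload = json.dumps(
        {
            "model": model,
            "prompt": build_prompt(question, documents),
            "stream": False,  # request a single JSON object instead of a stream
        }
    ).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]
```

Calling `generate_answer("What is swoox.io?", retrieved_docs)` requires a running Ollama instance with the chosen model available. `build_prompt` can be inspected on its own to see exactly which context the model receives.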

Example RAG Architecture with swoox.io

- Firecrawler: Crawls and extracts relevant content

- swoox.io: Orchestrates the entire workflow

- Vector search: Finds semantically relevant documents

- Ollama: Generates precise, context-aware answers
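To make the vector search step in this architecture more concrete, here is a small, self-contained sketch of similarity-based retrieval. It is a simplification under stated assumptions: the `embed` function is a toy word-count stand-in (purely lexical, not semantic), and a real setup would replace it with an embedding model and a vector database. All function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(
    query: str, documents: list[str], top_k: int = 2
) -> list[tuple[float, str]]:
    """Return the top_k documents ranked by similarity to the query."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Hypothetical sample passages, standing in for crawled content.
documents = [
    "swoox.io orchestrates automation and integration workflows.",
    "Ollama runs large language models on local hardware.",
    "The crawler extracts content from web pages.",
]
results = search("Which tool runs language models locally?", documents, top_k=1)
print(results[0][1])
```

The ranking logic stays the same when `embed` is swapped for a real embedding model that returns dense vectors. Only the vector representation and the similarity computation over it would change.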

Why swoox.io? The Benefits at a Glance

- Low-code automation for complex AI workflows

swoox.io allows complex data pipelines and integrations to be built without deep programming knowledge. This accelerates development and significantly lowers the entry barrier for RAG projects.

- Seamless integration of diverse tools

Whether Firecrawler, vector databases, local LLM runtimes like Ollama, or external APIs: swoox.io connects all components through standardized interfaces and event-driven workflows.

- Scalability and reusability

Once created, workflows can be easily adapted, duplicated, and applied to other use cases. Ideal for companies operating multiple RAG applications or scaling their projects.

- Transparency and monitoring

Integrated dashboards and logging features allow developers to always maintain an overview of the status and performance of their RAG pipelines.

- Data sovereignty through local processing

Running LLMs locally with a runtime such as Ollama keeps data processing fully under internal control, a key advantage for data-sensitive industries such as healthcare, finance, or legal.
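One simple way to back up the data-sovereignty point in a pipeline is to verify that the configured LLM endpoint actually points to a local or private address before any documents are sent to it. The following is a hedged sketch of such a guard. The function name `is_local_endpoint` is hypothetical, and the check is an illustration rather than a swoox.io feature.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True if the URL's host is localhost or a loopback/private IP address."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        # Other hostnames are not resolved here; treat them as non-local.
        return False
    return address.is_loopback or address.is_private


# Example: refuse to send documents to an endpoint outside the internal network.
endpoint = "http://localhost:11434/api/generate"
if not is_local_endpoint(endpoint):
    raise RuntimeError(f"Refusing to send data to non-local endpoint: {endpoint}")
```

The check is deliberately conservative: internal DNS names are rejected because they are not resolved, so an allowlist of trusted hostnames would be a natural extension for real deployments.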

Typical Use Cases for Local RAG Systems

RAG systems with local data processing allow companies to safely combine generative AI with internal knowledge – without cloud dependency. Here are some concrete use cases:

- Internal knowledge bases & employee support
  - Application: Automated answers to internal questions about processes, policies, IT systems, or HR topics.
  - Benefit: Less support effort, faster onboarding, better self-service options.

- Compliance & legal departments
  - Application: Analysis and interpretation of internal policies, contracts, or regulatory texts.
  - Benefit: Reduced risk, better decision-making, support during audits.

- Product development & R&D
  - Application: Access to internal research reports, patents, technical specifications.
  - Benefit: Faster innovation cycles, better utilization of existing knowledge.

- Sales & marketing
  - Application: Creation of personalized offers or presentations based on CRM and product data.
  - Benefit: Higher conversion rates, better customer targeting, time savings.

- Industry & manufacturing
  - Application: Support in maintenance, troubleshooting, and training through access to machine manuals and maintenance logs.
  - Benefit: Reduced downtime, more efficient maintenance, better onboarding.

Key Advantages of Local RAG Systems at a Glance

| Advantage | Description |
| --- | --- |
| Data sovereignty | No sensitive data leaves the company – ideal for GDPR compliance. |
| Domain-specific knowledge | LLMs are enriched with company-internal knowledge for more precise and relevant answers. |
| Cost efficiency | Reduction of licensing costs through local models and open-source technologies. |
| Flexibility | New data sources or documents can be integrated easily. |
| Offline capability | Can be used in isolated networks – e.g., in industry or government agencies. |

Conclusion: swoox.io as an Enabler for Production-Ready RAG Systems

swoox.io makes implementing RAG applications not only easier but also more robust and scalable. The platform provides everything modern AI projects need: automation, integration, transparency, and flexibility. Companies that want to use RAG seriously in practice will find a strong partner in swoox.io.

We would be happy to show you how easy and flexible process automation with swoox.io is.

Or you can try it out for yourself right away.